The AI Interview Score: Why I Trusted a Bot and Still Failed

I recently interviewed for a challenging Product Marketing role. It was a high-stakes meeting, and afterward, seeking objective reassurance, I did what any modern professional does: I fed the questions, and a summary of my answers, into an AI analysis tool.

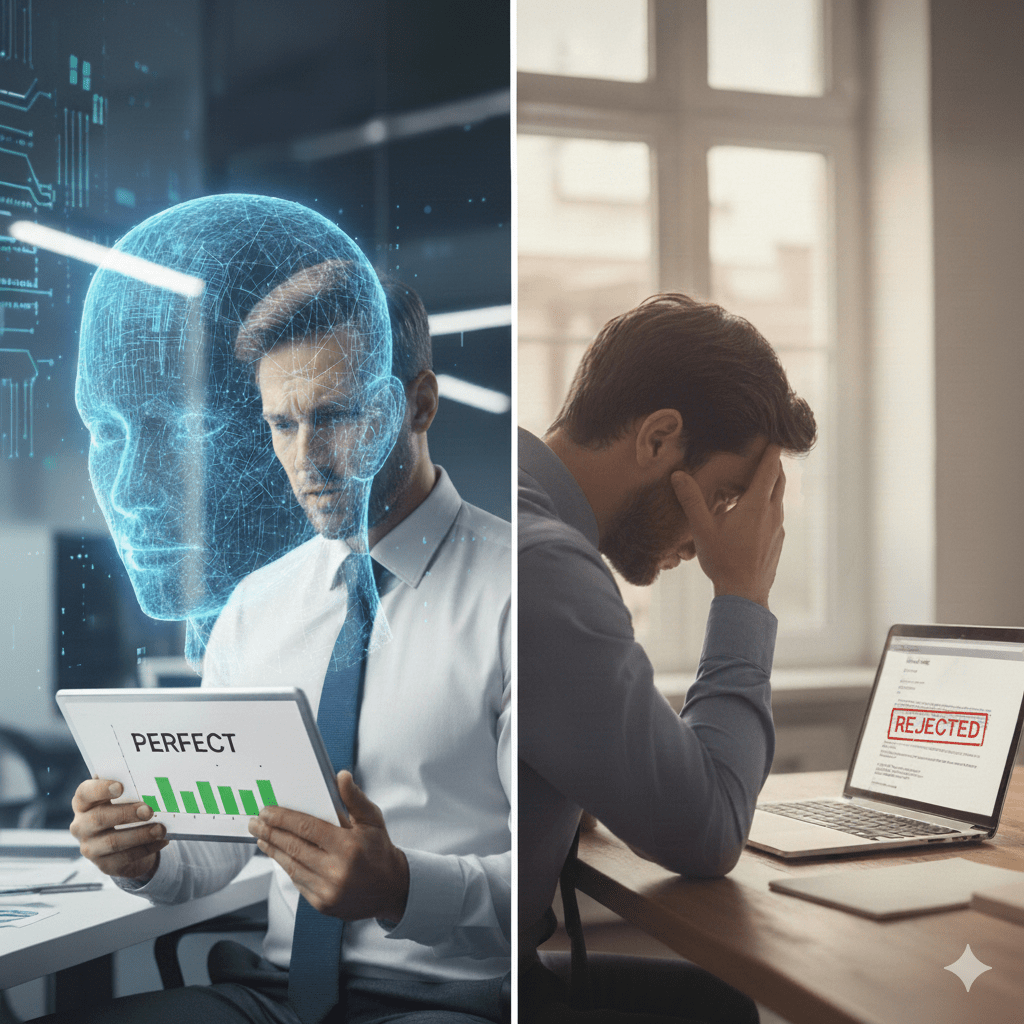

The tool scanned for keywords, assessed structural relevance, and even scored my tone based on the text. The verdict was confident, precise, and highly encouraging. The AI gave me a near-perfect score on competence and effectively told me I was “through the interview.”

But a few hours later, I got the rejection email.

My experience wasn’t just a personal setback; it was a harsh, expensive lesson in what I call the AI Trust Deficit. The algorithm measured my technical qualifications, structure, and keyword density, which are the ingredients of a perfect answer on paper. It completely missed what actually decided the outcome: the absence of genuine connection, the subtle lack of chemistry, and my failure to communicate my passion in a way that resonated with the human being on the other side of the screen.

The Siren Song of Algorithmic Perfection

We are living in an era where AI offers the illusion of total objectivity. Tools promise to remove bias, guarantee efficiency, and deliver the “best” answer based purely on data. This is the AI Mirage: we mistake a complete answer for a correct human decision.

Why did the AI fail? Because the essential elements of an interview—the things that get you hired—are unquantifiable and contextual:

- Sincerity and Presence: Was I truly present, or just reciting optimized talking points?

- Cultural Fit: Did my personality mesh with the interviewer’s style and the company’s ethos?

- Intuition: Did I instinctively understand the interviewer’s unstated need or concern?

AI optimizes for patterns. It doesn’t optimize for trust, rapport, or passion. And when we receive that “perfect” AI score, we unconsciously cede our own critical judgment. We stop asking: What is the machine missing? The danger isn’t that AI is sometimes wrong; it’s that its speed and false certainty make us professionally lazy and let the very skills that make us indispensable atrophy.

My rejection was a warning. It alerted me to the risk of outsourcing professional judgment to a black box. Now, the critical question is: What core human skill are we losing when we trust the algorithm completely?
