-

The 2026 Communicator’s Manifesto – Originality Over Automation

We’ve dismantled the jargon trap and elevated data with narrative. Now, as we stand in 2026, facing a landscape increasingly shaped by algorithms and automated content, the ultimate question emerges: How do we ensure our message not only cuts through the noise but also leaves an undeniable human imprint?

The answer lies in a simple, yet profound principle: Originality over Automation.

In a world drowning in machine-generated prose and templated responses, the true currency isn’t just information—it’s genuine insight. It’s the fresh perspective, the nuanced observation, the unexpected connection that only a human mind, steeped in experience and critical thought, can forge. This isn’t about shunning AI; it’s about leveraging it as a tool, not letting it become the master of your voice.

I’ve seen the allure of the quick fix, the tempting promise of instant content. But just like those “icebreaker scripts” we discussed earlier, automated content often lacks soul. It’s generic. It’s forgettable. And in a world where everyone has access to the same generative tools, “generic” is the new invisible.

Your competitive edge, whether in leadership, entrepreneurship, or any domain where ideas matter, isn’t in replicating what a machine can do. It’s in delivering what it cannot: the specific, the authentic, the deeply considered thought that carries the weight of your unique perspective.

This is a proposed manifesto for impact:

- Champion Clarity: Don’t just speak; make yourself understood.

- Craft Narratives: Don’t just present facts; tell compelling stories.

- Cultivate Originality: Don’t just churn out content; deliver distinct insights.

In an age of endless information, the greatest skill isn’t finding answers, but asking better questions. It’s in connecting disparate dots, challenging assumptions, and articulating a vision that resonates because it’s unmistakably yours.

So, as we move forward, let’s not just communicate. Let’s engineer clarity, infuse our narratives with genuine conviction, and stand firm in the belief that human originality will always be the most powerful force in a world of noise.

-

The Data vs. The Story – Why Numbers Need a Narrative

We’ve established that clarity trumps jargon. But clear words alone aren’t enough if your argument still lacks punch. What happens when you’ve got all the facts lined up, perfectly articulated, yet your audience remains unmoved? Often, it’s because you’ve presented data as a collection of isolated facts, rather than the compelling story it truly is.

Think about any great pitch, any successful campaign, any movement that captured hearts and minds. It wasn’t just about the raw numbers; it was about the narrative those numbers supported. A client doesn’t simply buy a product’s specifications; they buy the story of transformation it promises. Investors don’t just see spreadsheets; they envision the story of growth and impact.

I’ve witnessed brilliant analyses get lost because the data was presented in a vacuum. Staring at graphs, charts, and statistical summaries, the presenter was convinced the “truth” was obvious. But the truth, unadorned, is often inert. It’s our job, as communicators and leaders, to breathe life into it. To reveal the challenge, the surprising insight, the ultimate potential embedded within those numbers.

Consider the difference:

- “Our Q3 sales saw a 15% increase year-over-year.” (Fact)

- “Following a strategic pivot, our team not only overcame market headwinds but generated a remarkable 15% surge in Q3 sales, setting the stage for aggressive expansion into new territories.” (Story)

The second statement doesn’t dilute the factual integrity; it elevates it. It invites the audience into the journey, showing them why this 15% matters, what it signifies for the future, and who made it happen. This isn’t about fabricating; it’s about illuminating significance, transforming “so what?” into “wow!”

Your project, your product, your vision—it’s all a grand narrative waiting to be told. You began with a problem, embarked on a quest for solutions, faced hurdles, and unearthed insights. Your data points are merely the evidence along that path. Don’t just show the evidence; guide your audience through the entire unfolding drama. Make them feel the urgency, the excitement of the findings, and the profound implications of your conclusions.

Numbers don’t move people; narratives do. Let your data find its voice, and then watch your ideas resonate and inspire.

-

The Jargon Trap – Why Obscurity Isn’t Intelligence

Remember those pre-programmed sales scripts we often encounter? The ones filled with buzzwords and corporate-speak that feel designed to impress, but only manage to alienate? That’s the “jargon trap” in action. It’s not just in sales; it infiltrates every corner of communication, from boardrooms to blog posts, making simple ideas needlessly complex.

Here’s the stark truth: Obscurity isn’t intelligence; it’s often a shortcut, or worse, a hiding place.

When you truly understand something, you don’t need to dress it up in convoluted language. You can distill it to its essence. You can explain it to anyone, regardless of their background. The jargon itself isn’t the knowledge; it’s merely a secret handshake for those already inside the club. Relying on it exclusively means you’re only ever speaking to an echo chamber, missing out on the vast majority of potential connections and insights.

I’ve seen it countless times in the business world, in tech, even in casual conversation—the fear that if you simplify, you’ll sound less sophisticated, less “expert.” This fear is a barrier. It chokes off innovation, prevents collaboration, and keeps genuinely brilliant ideas from gaining the traction they deserve.

The real power player isn’t the one who can recite an obscure dictionary; it’s the one who can take a sprawling, complex challenge and articulate its core with elegant precision. That’s the person who cuts through the noise, who inspires action, and who truly makes an impact.

Let’s strip away the pretense. Let’s champion clarity as a strength, not a weakness. Because the moment you can explain your ‘why’ without needing a specialized vocabulary, that’s when your message truly breaks through. That’s when it stops being a monologue and starts becoming a movement.

-

The Human Differentiator – Cultivating Intellectual Agility in an AI-Augmented World

One question keeps forward-thinking professionals like you awake at night: “What can I do differently and uniquely to keep the conversation interesting and dynamic, rather than repeating the same script?” It is precisely the research inquiry that defines the post-script era. In 2026, with artificial intelligence now capable of generating sophisticated scripts, synthesizing vast data, and even mimicking emotional tonality, the value of the human salesperson isn’t in what they say, but in how they think and respond. The true differentiator is intellectual agility.

To move beyond the repetition of scripts, the modern sales professional must embrace the role of a Cognitive Architect. This means shifting from merely delivering information to actively helping clients make sense of their own complex internal landscapes. Rather than adhering to a pre-defined narrative, the agile seller identifies the client’s underlying “schema”—the mental framework they use to understand their problems and potential solutions. The goal is not to force the client into the seller’s schema, but to dynamically adapt, adjust, and even co-create a new, more effective schema with the client in real-time.

This requires a mastery of Recursive Inquiry. When a client asks a follow-up question, the inclination of a script-bound salesperson might be to pivot back to their pre-set agenda. However, the intellectually agile professional recognizes this as an invitation to go deeper. This involves two moves:

- Acknowledge and Validate: Explicitly recognizing the client’s question (“That’s a sophisticated question, and it tells me you’re thinking about [X]… Most people overlook that specific detail…”). This validates their intelligence and investment.

- Bridge and Co-Create: Using their question as a springboard to explore deeper implications or offer bespoke pathways (“Based on that, would it be more helpful to talk about [Option A] or [Option B] next?”). This transforms the seller into a strategic partner, collaboratively charting the course of discovery.

The inherent dynamism of this approach is what no AI can fully replicate today. While AI can process information, it struggles with the nuanced, empathetic understanding of human context, emotion, and the subtle cues that signal genuine trust or emergent need. The human element, therefore, becomes the “new gold standard”—not for its ability to flawlessly execute a pre-written dialogue, but for its capacity to be authentically present, to adapt spontaneously, and to forge genuine human-to-human connection.

Ultimately, the future of high-stakes negotiation lies not in better scripts, but in cultivating better human minds. This evolution transforms the salesperson from a mere presenter of solutions into a Co-Creator of Clarity, an individual whose unique value lies in their ability to navigate ambiguity, synthesize complex information on the fly, and build bespoke pathways to understanding. This profound shift from “scripting to sensemaking” is precisely the fertile ground for doctoral-level inquiry, opening avenues to explore how such intellectual agility can be cultivated, measured, and optimized to accelerate trust and drive meaningful outcomes in an increasingly automated world.

-

The Pivot to Inquiry – Recognizing the “Follow-Up Question” as the True Ignition of Trust

The demise of the sales script leaves a void, but it is precisely in this vacuum that authentic, high-value communication finds its opportunity to thrive. For the astute sales professional, the shift away from monologues isn’t a loss of structure but an invitation to cultivate intellectual agility and responsive presence. The true “icebreaker” in 2026 is no longer a pre-written opening statement, but the moment a prospect transitions from passive listening to active engagement—a phenomenon most clearly signaled by the emergence of follow-up questions.

As you astutely observed, Drake, the asking of follow-up questions marks a critical psychological and relational pivot. It signifies that the client has moved beyond mere reception of information and has become a co-investigator in the conversation. This isn’t just a casual query; it’s an “interest flag,” a conscious decision by the client to invest their cognitive energy in deepening their understanding. From an academic perspective, this is a clear demonstration of information-seeking behavior, where the client actively attempts to resolve perceived ambiguities or explore areas of heightened personal relevance.

This shift represents a fundamental realignment of power dynamics within the interaction. In a scripted environment, the seller holds the agenda, dictating the flow and content. When a client poses a follow-up question, they are subtly, yet powerfully, taking control of the agenda. They are directing the conversation to their immediate concerns, their priorities, and their specific informational gaps. For the responsive seller, this is invaluable data, precisely indicating where the client’s “pain points” or “value perceptions” truly lie.

A script, by its very design, aims to prevent such “interruptions,” prioritizing linear progression over emergent relevance. However, for the discerning professional, these “interruptions” are not deviations; they are the most valuable data points available. They reveal the client’s mental map, their underlying assumptions, and the precise angles that resonate most deeply. To pivot effectively, the salesperson must possess the “Dynamism” you highlighted – the capacity to seamlessly integrate the client’s emergent questions into the ongoing dialogue, fostering a truly “two-way traffic” conversation.

This dynamic interaction is not linear but transactional. Unlike a static script that risks ending the conversation if the client doesn’t follow a predetermined path, a dynamic dialogue operates as a continuous feedback loop. Every follow-up question feeds into the next insight, allowing the seller to iteratively refine their understanding and tailor their responses in real-time. This recursive process builds a stronger, more resilient relational bridge, as the client experiences being truly heard and understood. It accelerates the “Speed to Trust,” precisely because the interaction is co-created and genuinely responsive, moving from relational uncertainty to collaborative inquiry.

-

The Monologue’s Demise – Why the “Icebreaker Script” Suffocates High-Stakes Sales in 2026

In the increasingly complex world of 2026, the traditional sales script, once revered as the reliable “icebreaker,” has evolved from a foundational tool into a critical liability. What was designed to initiate conversation now actively obstructs genuine engagement, particularly in high-stakes B2B environments. This isn’t merely an operational inefficiency; it’s a fundamental breakdown in communicative efficacy, directly impacting trust formation and long-term client relationships.

The prevailing organizational adherence to standardized scripts often stems from a deeply ingrained managerial fear: the fear of losing control over messaging, brand consistency, and perceived performance metrics. Managers often cling to the script as a safety net, an assured starting point that promises a predictable trajectory for client interactions. However, this perspective fundamentally misunderstands the contemporary client. In an era saturated with information, where prospects leverage sophisticated AI tools for preliminary research, the client is no longer a passive recipient of data. They are informed, discerning, and acutely sensitive to anything that smacks of inauthenticity.

The critical flaw in the “icebreaker script” philosophy is its inherent egocentricity. A script is designed for the speaker’s comfort—to guide their words, to manage their anxieties, and to ensure their points are covered. It inherently neglects the listener’s immediate needs, interests, and prior knowledge. When a salesperson rigidly follows a pre-determined sequence of questions or statements, they inadvertently signal a lack of genuine interest in the client’s unique context. This immediately creates a “relational void,” where the client perceives the interaction as a broadcast rather than a dialogue, diminishing the potential for a meaningful connection before it even begins.

This dynamic becomes particularly detrimental in B2B scenarios where the stakes are high, and solutions are rarely off-the-shelf. Here, the client is typically seeking a strategic partner to navigate internal complexities, solve intricate problems, or achieve ambitious objectives. They are not looking for a recitation of features or a pre-packaged pitch. They require a bespoke intellectual engagement, a collaborative exploration of possibilities. A script, by its very nature, impedes the agility required for such an exchange, forcing the conversation into a rigid, linear model that fails to adapt to the dynamic and often unpredictable flow of genuine human interaction.

The illusion of control offered by scripts ultimately undermines the very objective they seek to achieve: influence. By prioritizing standardized delivery over responsive engagement, organizations inadvertently train their sales teams to listen for cues that align with the next line in the script, rather than truly listening for the unspoken needs or emergent insights from the client. This leads to missed opportunities, prolonged sales cycles, and a pervasive sense of disconnect that, in 2026, can easily send a prospect to a competitor who values genuine, unscripted human connection.

-

The Bot-Trap

How to Stay Human in a World of Automated Intelligence

We’ve reached a strange crossroads in 2026. We have AI that can draft a perfect contract, AI that can forecast supply chain disruptions, and AI that can write a sales pitch that sounds more “human” than most humans.

But here is the danger I’ve observed throughout my career: If we let the machine do the heavy lifting, our own strategic muscles begin to wither.

I call this Professional Atrophy. And the “Bot-Trap” is the final boss of the modern professional journey.

The 80/20 Rule of Human Intelligence

To avoid the trap, we must apply a ruthless filter to our work. I call it the 80/20 Rule of Human Intelligence.

- The 80% (The Clutter): This is the routine, the data-crunching, the scheduling, and the “Variance Corridor” filtering. We must delegate this to the machines. Not because we are lazy, but because our time is too valuable for the “noise.”

- The 20% (The Mastery): This is where the empire is built. It’s the high-stakes negotiation, the “Black Swan” event that the data didn’t predict, and the nuanced “Trust Deficit” that only one human can bridge with another.

Accountability is the Only Moat

In my PhD research, I’m exploring how “The Variance Corridor” isn’t just about efficiency—it’s about Accountability. A bot cannot be held accountable. It doesn’t lose sleep over a broken supply chain or a failed product launch. As a leader, your “Moat”—your protection against being replaced—is your willingness to stand behind the 20%.

When the AI flags an outlier (that +/- 10% variance we discussed), it is handing you the baton. That outlier is where the profit is hidden, and it’s where the risk is managed. If you don’t have the “Head” and the “Hand” to handle that 20%, you aren’t a strategist; you’re just an expensive system monitor.
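As a rough illustration of that handoff, the sketch below splits readings into routine items and outliers using the +/- 10% corridor mentioned above. The `Reading` type, the `triage` function, and the sample figures are hypothetical, invented here for illustration; they are not taken from the Variance Corridor research itself.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    label: str
    expected: float  # baseline value; assumed to be non-zero
    actual: float    # observed value


def variance(reading: Reading) -> float:
    """Relative deviation of actual from expected (0.10 means +10%)."""
    return (reading.actual - reading.expected) / reading.expected


def triage(readings, corridor=0.10):
    """Split readings into (routine, outliers).

    Readings inside the corridor are the delegable "80%";
    readings outside it are handed to a human for review.
    """
    routine, outliers = [], []
    for r in readings:
        if abs(variance(r)) <= corridor:
            routine.append(r)
        else:
            outliers.append(r)
    return routine, outliers


# Made-up sample data: expected versus actual cost per line item.
sample = [
    Reading("freight", expected=100.0, actual=105.0),
    Reading("packaging", expected=100.0, actual=125.0),
    Reading("tooling", expected=100.0, actual=88.0),
]
routine, outliers = triage(sample)
print("Automate:", [r.label for r in routine])
print("Human review:", [r.label for r in outliers])
```

The code only decides who looks at an item. What to do about an outlier stays with the person accountable for it.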

Staying “Hungry and Foolish”

Steve Jobs’ mantra has never been more relevant. Staying “Hungry” in 2026 means being hungry for the complex, messy problems that don’t have a clear data set. Staying “Foolish” means being willing to trust your gut—your Synthesized Intuition—even when the AI suggests a safer, more “average” path.

Conclusion: The New Empire Builders

The journey from a “Smooth Talker” (Part 1) to a “Value Architect” (Part 2) ends here: with a human who uses AI to amplify their reach, not replace their brain.

We don’t need to fear the “Bot-Trap” if we are the ones designing the cage. By focusing on Value Engineering, respecting the Time-Flow, and refusing to succumb to Professional Atrophy, we aren’t just surviving the AI age.

We are architecting it.

-

The Simplifier

Moving from Feature-Dumping to Value Engineering

If you’ve spent any time in Product Management, you know the “Feature Trap.” It’s that moment in a sales pitch where the presenter lists 50 things the product can do, hoping one of them sticks.

In my experience—whether dealing with the heavy machinery of Auto Ancillaries or the complex architecture of IT Hardware—I’ve found that buyers don’t want more features. They want less friction.

They want a Simplifier.

The “Spaghetti” Workflow

Most businesses operate on what I call “Spaghetti Workflows.” Over years of growth, processes become tangled. Procurement teams are bogged down by “clutter” (the 80% of routine tasks), and sales teams are bogged down by “manual chasing.”

The future sales pitch isn’t a demonstration of your product; it is a diagnostic of their spaghetti.

Architecture over Persuasion

When I talk about Value Engineering, I’m talking about using AI to deconstruct the prospect’s current state. The future pitch sounds like this:

“I’ve analyzed your current time-flow. You are spending 40 hours a week on manual quote reconciliation that sits within a predictable 5% variance. My goal is to automate that ‘clutter’ so your team can focus on the 20% of strategic sourcing that actually drives your margin.”

This is the Simplifier in action. You aren’t asking for a sale; you are proposing a structural redesign of their day.

The “Drake Variance” in Sales

In my research on the Variance Corridor, I focus on how buyers filter out noise. As a salesperson, you must use this logic in reverse.

By showing the buyer that your pricing is built on a transparent cost-discovery model, you move your pitch into their “Optimal Zone.” You aren’t “negotiating” a price; you are “engineering” one. You are telling the buyer: “We’ve removed the fluff. This is the true cost of value.”

Why the Simplifier Wins

- It respects Time-Flow: You aren’t adding to their “to-do” list; you are taking things off it.

- It bridges the Trust Deficit: Transparency in cost-modeling is the ultimate trust-builder.

- It prevents Atrophy: By automating the “boring 80%,” you are actually selling the buyer’s team their own intelligence back. You are giving them the time to be strategic again.

The New Bottom Line

The “Simplifier” doesn’t just close deals; it builds Empires. It creates a relationship where the salesperson is seen as a consultant-architect rather than a vendor.

-

The Death of the Sales-Script. (The Conflict)

Why the “Smooth Talker” Era is Ending in the Age of AI

In my 12 years navigating sales and product ownership, from heavy industrial Oil & Gas to precision IT Hardware, I’ve sat on both sides of the table. I’ve delivered the pitches, and I’ve scrutinized the quotes.

If there is one thing I’ve learned, it’s this: The “Smooth Talker” is becoming a liability.

For decades, sales was built on the “Script.” We were taught to pivot, to handle objections with pre-set decks, and to “ABC” (Always Be Closing). But as we enter 2026, that era isn’t just fading—it’s being dismantled by the very technology we thought would save it.

The “Plastic” Problem

We are currently drowning in “AI-generated” outreach. My LinkedIn inbox is a graveyard of perfectly punctuated, yet utterly hollow, sales pitches. These are scripts written by bots, for bots. It has created a Trust Deficit.

When a Buyer—especially a sophisticated one—encounters a pitch that feels “plastic,” they don’t just ignore the email; they lose respect for the brand. Why? Because a script signals that you haven’t done the heavy lifting of understanding their specific friction.

The Rise of the “Variance Corridor”

The modern buyer is getting smarter. As I’ve been developing in my own research on the Variance Corridor, strategic procurement is moving away from “Lowest Price” and toward “Structural Logic.” If a buyer is using AI to filter out quotes that don’t make mathematical sense, your “charming” sales script won’t save you. If your pitch sits outside the corridor of reality—either too cheap to be sustainable or too expensive to be justifiable—the machine flags you as “clutter” before you even get a meeting.
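A buyer-side filter of this kind might look something like the sketch below. The post does not define how the corridor is computed, so two things here are assumptions: the reference point is the median of the quotes received, and the corridor is 10% either side of it. The function name, vendor names, and prices are hypothetical.

```python
from statistics import median


def classify_quotes(quotes, width=0.10):
    """Label each vendor quote relative to a corridor around the median.

    quotes: dict mapping vendor name -> quoted price
    width:  assumed half-width of the corridor (0.10 means +/- 10%)
    """
    mid = median(quotes.values())
    low, high = mid * (1 - width), mid * (1 + width)

    labels = {}
    for vendor, price in quotes.items():
        if price < low:
            labels[vendor] = "too cheap"      # may not be sustainable
        elif price > high:
            labels[vendor] = "too expensive"  # hard to justify
        else:
            labels[vendor] = "in corridor"
    return labels


# Made-up quotes for a single line item.
quotes = {"Vendor A": 100, "Vendor B": 104, "Vendor C": 70,
          "Vendor D": 140, "Vendor E": 98}
for vendor, label in classify_quotes(quotes).items():
    print(vendor, "->", label)
```

A real procurement model would presumably build the reference price from cost drivers rather than from the other bids. The median is used here only to keep the example self-contained.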

From Scripting to Architecting

The salespeople who will build empires in the next five years aren’t the ones with the best vocabulary; they are the ones who can architect a solution in real-time. They don’t follow a script; they follow a “Simplifier” logic. They look at a prospect’s messy, “spaghetti” workflow and use AI to deconstruct it, showing exactly where the time-flow is blocked.

The shift is simple but brutal:

- Old Sales: “Trust me because I sound confident.”

- New Sales: “Trust me because the data-driven architecture of this deal is transparent.”

The “Professional Atrophy” Warning

The danger for all of us—myself included—is leaning too hard on the automation. If we let the AI write our scripts, our own intuition for the “human nuance” withers away. I call this Professional Atrophy.

If you stop practicing the art of the “Deep Dive,” you won’t be able to handle the 20% of problems that AI can’t solve—the “Black Swan” events, the complex interpersonal dynamics, and the unprecedented market shifts.

The Bottom Line

The script is dead because the buyer can finally see through it. The future belongs to the Synthesizer—the leader who uses AI to handle the data-drudgery so they can spend 100% of their human energy on building real, data-backed trust.

-

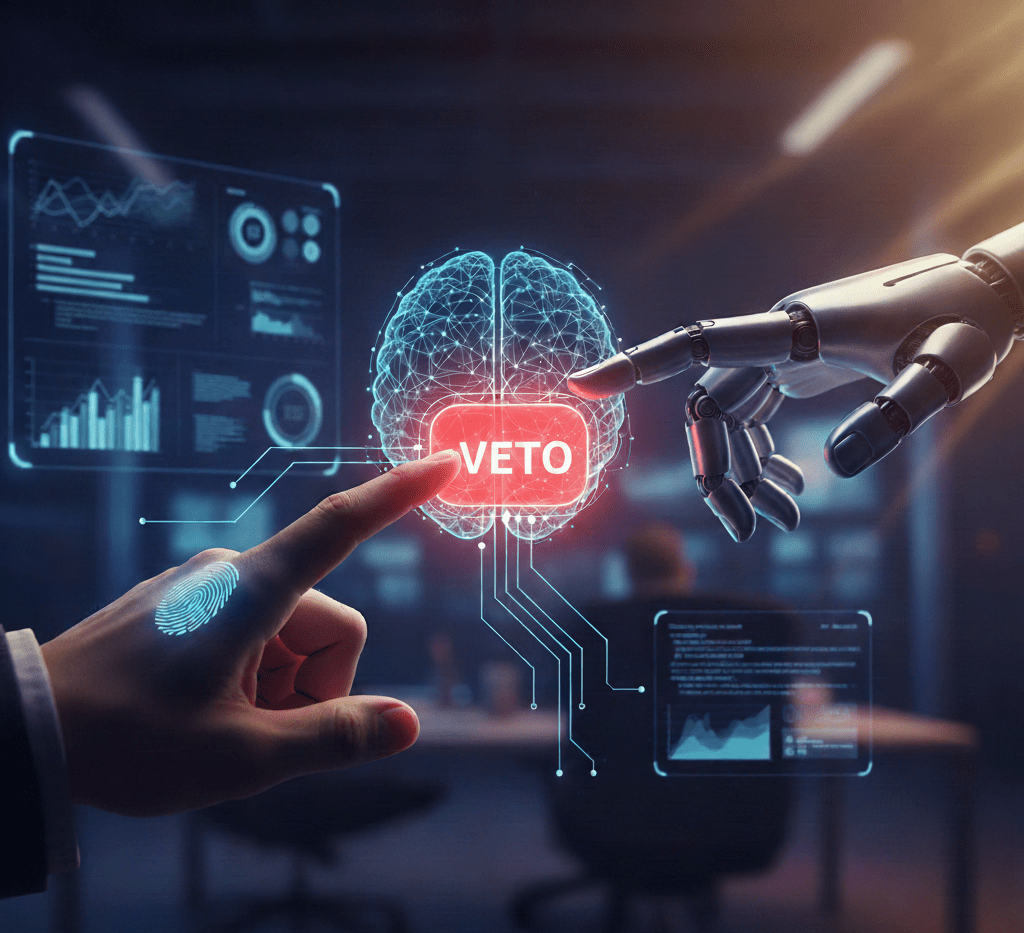

Building a Human Checkpoint — Recalibrating Trust.

Here is the framework for a “Human Veto” process:

1. The “Blind Spot” Audit (Contextual Validation)

AI is excellent at processing the data it has, but it is “blind” to everything else.

- The Action: Before accepting an AI result, ask: “What is the AI not seeing?”

- The Detail: This means looking for “off-page” factors like current office politics, a client’s recent emotional state, or sudden market shifts that haven’t hit the data sets yet. If the AI suggests a strategy based on last month’s data, the human checkpoint ensures it still makes sense this morning.

2. Cross-Verification via Non-Digital Sources (The Reality Check)

We have a habit of checking digital data with more digital data, which can create an echo chamber.

- The Action: Mandate a “triangulation” step using a human or physical source.

- The Detail: If an AI analysis says a project is on track, don’t just check the dashboard. Pick up the phone. A 30-second conversation with a project lead (a “non-digital source”) can reveal nuances—like team burnout or a vendor delay—that a spreadsheet will never capture.

3. The “Inversion” Test (Checking for Bias)

Algorithms often take the path of least resistance, which can lead to repetitive or biased outcomes.

- The Action: Purposefully argue against the AI’s recommendation.

- The Detail: If the AI flags a specific candidate as the “best fit,” the human checkpoint requires you to ask: “Why might this recommendation be wrong?” or “What would the ‘opposite’ of this recommendation look like?” This forces the professional to use their critical thinking muscles rather than just hitting “Approve.”

Don’t let the speed of AI outrun your common sense. Build your checkpoints today.

-
